RESEARCH BRIEF

Introduction

Microsoft, Google, Amazon, Meta, Samsung, and many other companies have collectively invested more than $1 trillion in artificial intelligence technology and development, underscoring AI's rapidly growing significance across industries. With AI-driven revenues projected to surpass $126 billion in 2025 alone, both the financial and the technological landscape are shifting rapidly.

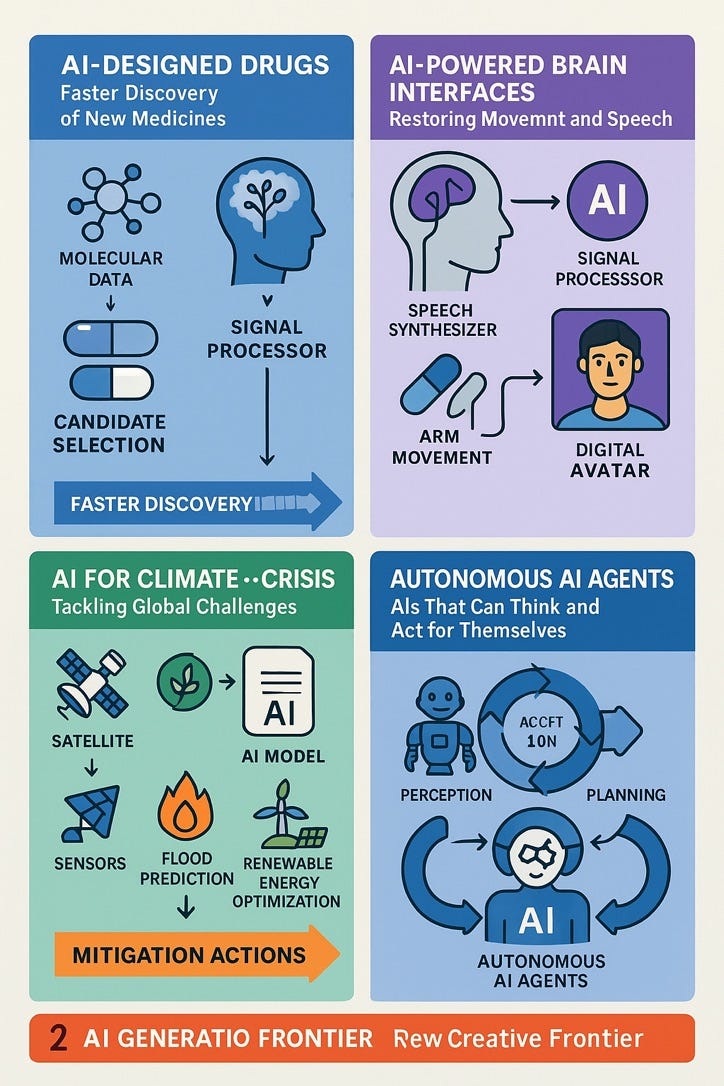

Artificial Intelligence (AI) is rapidly moving from research labs into the fabric of everyday life. Over the next 3–5 years, AI advancements are poised to transform how we work, learn, and manage daily tasks. Unlike past technological shifts, this AI wave is distinguished by systems that can understand context, act autonomously, and reason through complex problems. Three breakthrough developments are driving this transition toward more general AI capabilities:

Massive Context Windows: New AI models can read and retain far more information at once than ever before.

Autonomous AI Agents: AI programs are emerging that can plan and execute tasks in multi-step workflows without constant human prompting.

Emulation of Rational Thinking: Researchers are teaching AI to perform aspects of human reasoning – such as logical planning and symbolic problem-solving – moving closer to artificial general intelligence (AGI).

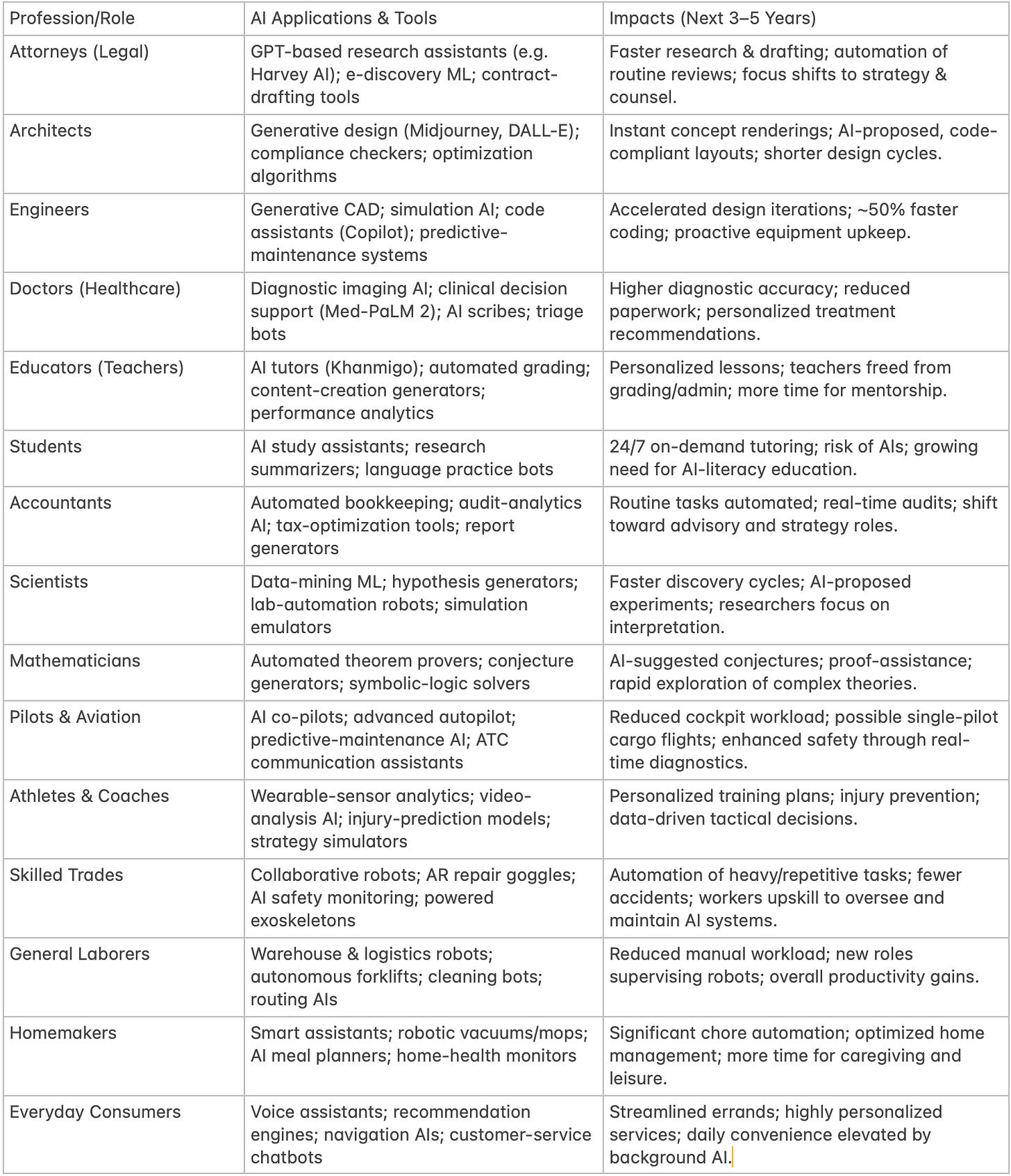

Each of these breakthroughs will amplify AI’s impact across a wide range of professions and social roles. In this report, we explore concrete examples and near-term projections (3–5 years, with glimpses further out) of how AI will reshape work for attorneys, architects, engineers, doctors, educators, students, accountants, scientists, mathematicians, pilots, athletes, skilled tradespeople, homemakers, laborers, and more. The focus is on practical applications and credible forecasts rather than abstract theory. We also include summary tables to organize the anticipated impacts by role.

Major Technological Breakthroughs Enabling the Next AI Leap

Large Context Windows: AI That Can Read Ten Books at Once

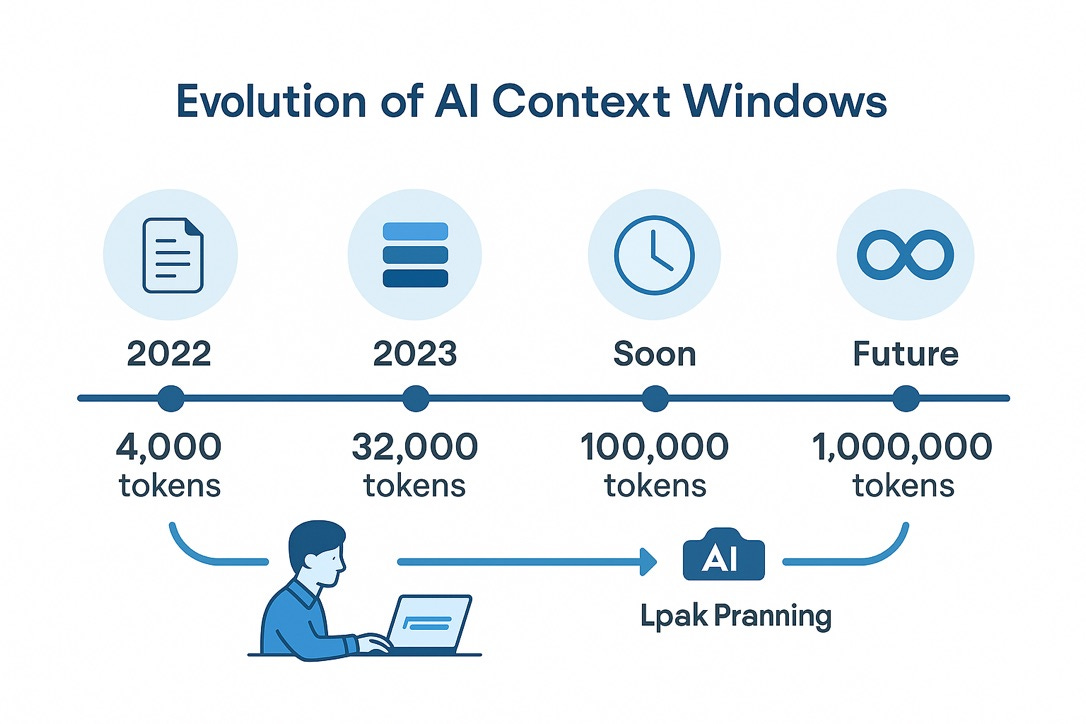

One fundamental leap is the expansion of context windows for large language models. The context window is essentially an AI’s short-term memory: how much text it can absorb and reason about at one time. Early versions of ChatGPT in 2022 had a limit of around 4,000 tokens (roughly 3,000 words). Within a year, leading models offered 32,000-token windows, and some passed 100,000 tokens (roughly the length of a 250-page book). This means an AI can ingest entire documents, long transcripts, or multiple chapters in one go without losing track.

Context sizes are now expanding into the millions of tokens. OpenAI’s GPT-4.1 model, for example, can process up to 1 million tokens at once, roughly equivalent to 750,000 words. To put that in perspective, a million tokens could hold about ten 250-page books or a sizable corporate knowledge base in a single query. With such capacity, an AI assistant could read a large volume of contracts, medical records, or scientific literature in one pass, synthesizing information across them.
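These figures rest on a common rule of thumb of about 0.75 English words per token. The short Python sketch below makes that arithmetic explicit; the 0.75 ratio and the 300-words-per-page figure are assumptions, and real ratios vary with the tokenizer and the text.

```python
# Rough conversions between tokens, words, and printed pages.
# Assumed rules of thumb (not exact): about 0.75 English words per token
# and about 300 words per printed page. Real ratios vary by tokenizer and text.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 300


def tokens_to_words(tokens: int) -> float:
    """Estimate how many English words fit in a token budget."""
    return tokens * WORDS_PER_TOKEN


def tokens_to_pages(tokens: int) -> float:
    """Estimate how many printed pages fit in a token budget."""
    return tokens_to_words(tokens) / WORDS_PER_PAGE


for window in (4_000, 32_000, 100_000, 1_000_000):
    print(f"{window:>9,} tokens ~ {tokens_to_words(window):>9,.0f} words "
          f"~ {tokens_to_pages(window):>6,.0f} pages")
```

At these assumed ratios, 100,000 tokens come to about 250 pages and a 1-million-token window to about 2,500 pages.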

Larger context windows enable deeper comprehension and reduce the need to chunk and summarize material piecemeal. An AI could retain context across very long conversations or documents, producing more coherent and relevant responses. For example, lawyers might feed thousands of pages of case law into one prompt for analysis, or scientists could have an AI review a whole stack of research papers at once. This unlocks holistic understanding, the ability to connect dots across many sources, which was out of reach when AIs could only process short snippets.

Of course, simply dumping massive data into a prompt isn’t a silver bullet. Too much information can overwhelm even an AI, which may focus on the beginning or end of a long prompt and miss details in the middle. Researchers note that the quality of information and well-targeted prompts still matter more than sheer quantity. Nonetheless, the advent of million-token contexts marks a pivotal step: it is the difference between an AI with the “attention span” of a pamphlet and one that can digest a small library. In practical terms, this will supercharge professions that deal with large volumes of text or data.
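One way to act on that advice is to select only the material relevant to a question before filling the context window. The Python sketch below is a minimal illustration, assuming simple keyword overlap as the relevance score and the same rough 0.75-words-per-token estimate; `select_context`, `keywords`, and the stopword list are hypothetical, and production systems usually rank passages with embedding-based retrieval instead.

```python
import re

# A tiny, hypothetical stopword list so filler words do not count as matches.
STOPWORDS = {"a", "an", "the", "is", "of", "on", "to", "in", "and", "under", "when", "what"}


def keywords(text: str) -> set[str]:
    """Lowercased content words in the text."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def approx_tokens(text: str) -> int:
    """Rough token estimate, assuming ~0.75 words per token."""
    return max(1, round(len(text.split()) / 0.75))


def select_context(query: str, chunks: list[str], token_budget: int) -> list[str]:
    """Keep the chunks that best match the query without exceeding the budget."""
    q = keywords(query)
    # Rank by keyword overlap (highest first), breaking ties by original position.
    ranked = sorted(
        ((len(q & keywords(c)), i) for i, c in enumerate(chunks)),
        key=lambda pair: (-pair[0], pair[1]),
    )
    chosen, used = [], 0
    for score, i in ranked:
        if score == 0:
            break  # remaining chunks share no keywords with the query
        cost = approx_tokens(chunks[i])
        if used + cost <= token_budget:
            chosen.append(i)
            used += cost
    # Return chunks in document order so the assembled prompt reads coherently.
    return [chunks[i] for i in sorted(chosen)]


docs = [
    "The lease term is twelve months.",
    "Rent is due on the first of each month.",
    "The cafeteria menu changes weekly.",
]
print(select_context("When is rent due under the lease?", docs, token_budget=12))
```

Returning the chosen chunks in their original order, rather than by score, keeps the assembled prompt readable.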

Autonomous AI Agents: From Chatbots to Digital Colleagues

Another game-changing development is the rise of autonomous AI agents – AI systems that act on objectives, self-direct, and interact with other software/tools. Traditional AI assistants (like standard chatbots) are reactive, responding only when a human gives a prompt. By contrast, autonomous agents can be given a high-level goal and then proactively break it into sub-tasks, generate plans, and execute those plans, querying other AI models or using apps as needed.

In 2023, experimental projects like AutoGPT and BabyAGI showcased this concept. AutoGPT, for instance, wraps an LLM (GPT-4) with a loop that lets it ask and answer its own questions repeatedly until a goal is achieved. Essentially, the AI can generate a plan, carry out an action (like calling an API or browsing the web), evaluate the result, adjust the plan, and continue – all with minimal human input. The AI becomes its own taskmaster. As a simple example, when one user instructed AutoGPT to “help me grow my flower business,” the agent autonomously developed a plausible marketing strategy and even built a basic website to attract customers. This was done by the AI calling web services, generating content, and iterating on the plan, all on its own.
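The plan, act, evaluate, and adjust cycle described above can be sketched as a short loop. In this Python illustration the planner is a hypothetical, scripted stand-in for the LLM call an AutoGPT-style agent would make, and the `search` and `write_page` tools are fakes; only the loop structure is the point.

```python
from typing import Callable, Optional

# One step = (tool name, tool input). None means the planner considers the goal met.
Step = Optional[tuple[str, str]]
Planner = Callable[[str, list[str]], Step]
Tool = Callable[[str], str]


def run_agent(goal: str, planner: Planner, tools: dict[str, Tool], max_steps: int = 10) -> list[str]:
    """Plan -> act -> observe loop with a hard step limit."""
    history: list[str] = []
    for _ in range(max_steps):             # cap guards against runaway loops
        step = planner(goal, history)      # (re)plan using everything observed so far
        if step is None:                   # planner judges the goal achieved
            break
        tool_name, tool_input = step
        observation = tools[tool_name](tool_input)      # act
        history.append(f"{tool_name}: {observation}")   # feed result back to the planner
    return history


# Hypothetical stand-ins. In an AutoGPT-style agent, the planner would be an LLM call.
def toy_planner(goal: str, history: list[str]) -> Step:
    if not history:
        return ("search", "flower shop marketing ideas")
    last = history[-1]
    if last.startswith("search: ERROR"):
        return ("search", "local florist promotion ideas")  # adjust after a failure
    if last.startswith("search:"):
        return ("write_page", "Fresh flowers delivered daily")
    return None


def fake_search(query: str) -> str:
    if "marketing" in query:
        return "ERROR: rate limited"
    return f"3 results for '{query}'"


def fake_write_page(headline: str) -> str:
    return f"page drafted with headline '{headline}'"


log = run_agent("help me grow my flower business", toy_planner,
                {"search": fake_search, "write_page": fake_write_page})
for entry in log:
    print(entry)
```

The `max_steps` cap reflects the kind of safety check autonomous agents need, since a planner that never declares success would otherwise loop forever.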

Such autonomous agents are still rudimentary (often “as deep as a puddle” despite being wide-ranging), but they hint at AI systems that could function more like a junior colleague than a static tool. In the next few years, we can expect personal AI assistants that don’t just answer questions, but take actions on our behalf: scheduling meetings, researching and drafting reports, monitoring projects, and handling routine decisions – all while we focus on higher-level direction. These agents will likely integrate with our software ecosystems (email, browsers, databases, etc.), effectively becoming digital employees or co-pilots for knowledge work.

Crucially, the improvement in context windows discussed above also makes autonomous agents more effective. With more context, an AI agent can “remember” the entire state of a complex task or conversation and handle more elaborate goals. Likewise, breakthroughs in reasoning (next section) make their planning more reliable. Tech companies are racing to enhance these capabilities; OpenAI, for example, noted that GPT-4.1’s improved long-context comprehension makes it considerably more effective at powering AI agents. Over the next few years, autonomous agents could assist in everything from finance (monitoring markets and executing trades within set limits) to personal life (managing smart homes, shopping, or even offering companionship).

While full autonomy raises new challenges (safety checks, error handling, ethical boundaries), the trend is clearly toward AI that does things, not just says things. This has profound implications for productivity: routine workflows can be delegated entirely to AI, freeing humans to concentrate on strategy, creative work, or interpersonal aspects that AI can’t handle.

Emulating Reasoning and Human-Like Thought Processes

The third breakthrough is more conceptual but arguably the most important on the road to AGI: teaching AI to think in more human-like, rational ways. Today’s most powerful AI models are extremely adept at pattern recognition and generating content, but they don’t truly understand logic or causality the way humans do. They can also be brittle in reasoning – for example, making arithmetic mistakes or failing at multi-step planning unless carefully guided. Researchers are addressing this by integrating explicit reasoning mechanisms into AI systems, combining symbolic logic with neural networks.

One approach is to have AI generate explicit step-by-step chains of thought (often called “chain-of-thought prompting”). Instead of producing an answer directly, the AI is prompted to work through the problem like a student showing their work. This can dramatically improve accuracy on complex reasoning tasks, because the intermediate steps give the model a chance to catch and correct errors along the way. Another approach is hybrid AI architectures (neuro-symbolic AI) that use rule-based components for logic and neural nets for intuition. For instance, a system might use a symbolic planner to outline a multi-step plan and a language model to fill in the details, marrying analytical precision with generative flexibility.
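To make the hybrid idea concrete, the Python sketch below pairs a tiny symbolic planner (breadth-first search over STRIPS-style actions with preconditions and effects) with a stubbed function standing in for the language model that would phrase each step. The action names and the report-writing domain are hypothetical.

```python
from collections import deque
from typing import NamedTuple, Optional


class Action(NamedTuple):
    """A STRIPS-style action: applicable when `pre` holds; adds and deletes facts."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset = frozenset()


def plan(start: set[str], goal: set[str], actions: list[Action]) -> Optional[list[str]]:
    """Symbolic step: breadth-first search for the shortest sequence reaching the goal."""
    initial = frozenset(start)
    frontier = deque([(initial, [])])
    seen = {initial}
    while frontier:
        state, path = frontier.popleft()
        if goal <= state:
            return path
        for act in actions:
            if act.pre <= state:
                nxt = (state - act.delete) | act.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, path + [act.name]))
    return None  # goal unreachable with these actions


def describe_step(step: str) -> str:
    """Neural step (stubbed): a language model would expand each step into prose."""
    return f"Next, {step.replace('_', ' ')}."


ACTIONS = [
    Action("gather_sources", frozenset(), frozenset({"sources"})),
    Action("outline", frozenset({"sources"}), frozenset({"outline"})),
    Action("draft", frozenset({"outline"}), frozenset({"draft"})),
    Action("cite", frozenset({"draft", "sources"}), frozenset({"citations"})),
]

steps = plan(set(), {"draft", "citations"}, ACTIONS)
for s in steps:
    print(describe_step(s))
```

Because the search is exhaustive and breadth-first, the returned plan satisfies every precondition and uses as few actions as possible, which is the kind of guarantee the neural half cannot offer on its own.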

Researchers argue that such hybrid reasoning is a promising path toward AGI. By incorporating symbolic reasoning, planning, and explicit logical rules, AI can overcome some limitations of pure deep learning, like poor generalization or lack of transparency. In practical terms, this means future AI could handle tasks requiring rigorous thinking – e.g. verifying the steps of a mathematical proof, debugging complex code by logically tracing execution, or making legal arguments grounded in statutes and precedent.

We already see early signs: some AI models can now solve math word problems and even prove simple theorems by internally simulating reasoning steps. Tool-use features such as OpenAI’s function calling (and its earlier plugins framework) let an AI consult external knowledge bases or calculators when logical precision is needed, for example by calling a solver API for math. Over the next few years, we’ll see “reasoning-enhanced” AI systems that are far less likely to make nonsensical errors, because they can plan, introspect, and correct themselves during problem-solving. Researchers increasingly invoke “System 2” thinking (deliberative, logical thought) as AI’s next frontier, complementing the fast, intuitive pattern-matching of deep learning (“System 1”).
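The tool-use pattern can be sketched as follows: the model emits a structured request, and ordinary code executes it exactly. The JSON shape `{"name": ..., "arguments": ...}` is only loosely modeled on function-calling APIs and is an assumption here, as are the names `dispatch`, `safe_eval`, and `calculator`.

```python
import ast
import json
import operator

# Arithmetic operators the calculator tool accepts; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,   # note: huge exponents could still be slow
    ast.USub: operator.neg,
}


def safe_eval(expression: str) -> float:
    """Evaluate plain arithmetic by walking the syntax tree (never calls eval)."""
    def visit(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return visit(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](visit(node.left), visit(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](visit(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")
    return visit(ast.parse(expression, mode="eval"))


# Hypothetical tool registry: tool name -> handler taking a dict of arguments.
TOOLS = {"calculator": lambda args: safe_eval(args["expression"])}


def dispatch(tool_call: str) -> float:
    """Run a model-requested tool call of the assumed form {"name": ..., "arguments": ...}."""
    call = json.loads(tool_call)
    args = call["arguments"]
    if isinstance(args, str):   # some APIs encode arguments as a JSON string
        args = json.loads(args)
    return TOOLS[call["name"]](args)


print(dispatch('{"name": "calculator", "arguments": {"expression": "17 * 23 + 4"}}'))
```

Walking the syntax tree instead of calling Python's `eval` keeps the calculator limited to arithmetic, so a malformed or malicious request raises an error rather than running arbitrary code.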

In summary, AI is gradually moving from being a savvy auto-completer of text to something more akin to a rational agent. It won’t equal human common sense in 5 years, but it will get much closer in its ability to handle abstractions and chained reasoning. This will make AI far more reliable in high-stakes domains (like diagnosing a patient or flying a plane) where step-by-step correctness is critical.

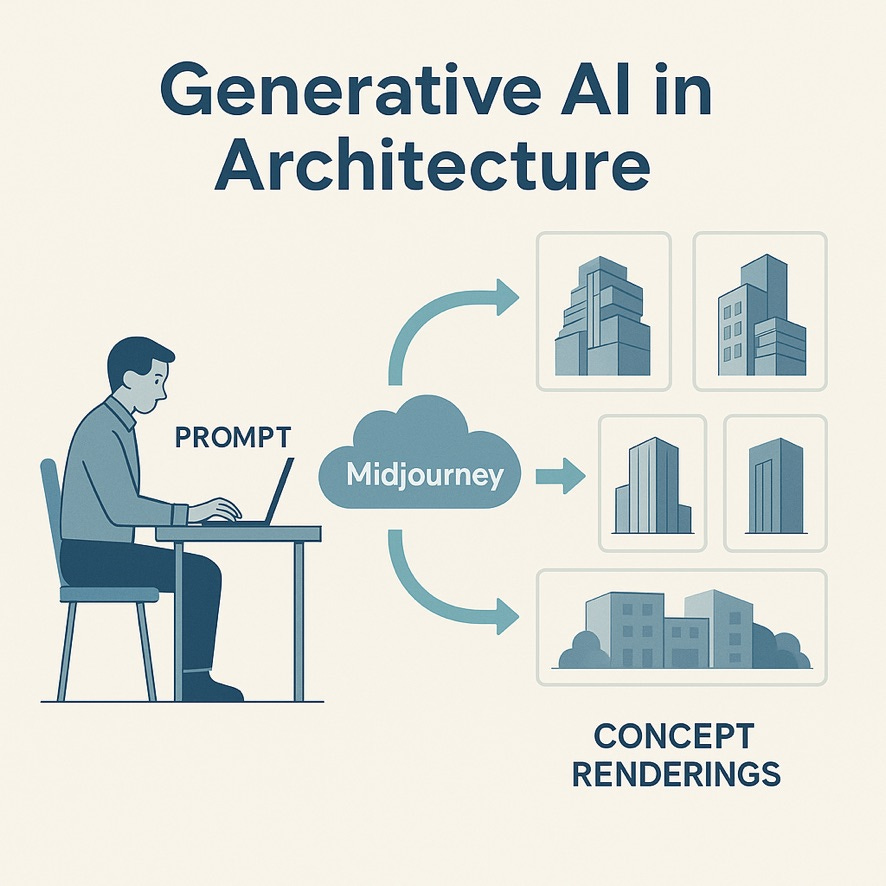

Example: Generative AI can rapidly visualize designs. Architects now use tools like Midjourney to generate concept renderings in seconds, iterating on ideas that meet project parameters.

Impacts of AI Across Professions and Everyday Roles

With these technological catalysts in place, AI’s real-world impact will span virtually all fields. In the following sections, we break down the concrete applications and changes expected in various professions and social roles. Each subsection highlights near-term examples (what’s happening now and next) and future implications (looking 5+ years out). A summary table is provided at the end for quick reference by role.

Legal Profession (Attorneys and Paralegals)

Lawyers are already leveraging AI to streamline legal research, document review, and drafting. Large language models with legal training can sift through case law, summarize precedents, and even compose first drafts of contracts or briefs. For example, a platform called Harvey AI (built on GPT-4) has been adopted by dozens of major law firms to automate aspects of contract analysis and due diligence. Harvey can ingest thousands of pages of legal documents, flag key clauses or inconsistencies, and answer lawyers’ questions in natural language – all with citations to the source material. More than 60 international law firms were already utilizing such generative AI services by 2024, a number that has only grown since.

In practice, an attorney might use an AI assistant to research a legal question: instead of spending hours digging through books or databases, they could ask the AI to find relevant cases and receive a well-structured summary of opinions with pinpoint citations. Large context windows, combined with search over case-law databases, make this possible: the AI retrieves candidate opinions and can read many of them in full within a single query. Early pilots have shown promising results; in one test, a GPT-based tool answered landlord/tenant legal questions so well that attorneys said they would send out 86% of its answers with minimal or no editing. Such tools are not perfect and still require human oversight (especially to catch subtle errors or “hallucinated” citations), but they dramatically cut down the grunt work.
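Part of that oversight can be automated. The Python sketch below flags any citation in an AI-drafted answer that does not match a source actually retrieved for the query; the bracketed `[Case-YYYY-NNN]` citation format and the function name are hypothetical.

```python
import re

# Hypothetical citation format: bracketed IDs such as [Case-2019-014].
CITATION = re.compile(r"\[([A-Za-z]+-\d{4}-\d{3})\]")


def unverified_citations(answer: str, retrieved_ids: set[str]) -> list[str]:
    """Cited IDs that are absent from the sources actually given to the model."""
    cited = CITATION.findall(answer)
    # dict.fromkeys removes duplicates while keeping first-seen order.
    return [c for c in dict.fromkeys(cited) if c not in retrieved_ids]


draft = ("The clause is enforceable [Case-2019-014], as reaffirmed in "
         "[Case-2021-207] and again in [Case-2019-014].")
print(unverified_citations(draft, {"Case-2019-014"}))
```

A check like this only confirms that a cited source exists in the retrieved set, not that it supports the proposition, so a lawyer still has to read it.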

Another application is e-discovery in litigation. AI can rapidly review millions of emails or documents during discovery, identifying relevant evidence much faster than paralegal teams, and with fewer misses. Older keyword-based tools already automated part of this work, but modern AI’s ability to understand context and nuance makes it far more effective. Law firms are also deploying AI for document drafting. For instance, a firm might use a custom GPT model (trained on its past briefs and house style) to generate a first draft of a motion, which the lawyer then refines. This flips the task from writing from scratch to editing and guiding the AI, a significant productivity boost.

In the next 3–5 years, we will likely see AI co-counsel as a standard part of legal teams. Routine work like contract initial drafting, basic patent applications, or due diligence reports on mergers could be largely AI-generated, with lawyers focusing on reviewing and providing judgment. Natural language queries to legal databases will feel like talking to an expert colleague who instantly recalls every case ever decided. This could also democratize legal services – for simple matters, individuals or small businesses might get AI-generated legal advice or documents at low cost, narrowing the “justice gap” where full legal representation is too expensive.

Looking further out, as AI reasoning improves, one can imagine AIs handling more complex tasks: drafting sophisticated litigation strategies or even interacting in court in some capacity (though ethical and legal barriers mean AI “lawyers” arguing in court are likely more than a decade away, if ever). However, some countries might start allowing AI-driven adjudication for minor disputes or AI mediators in negotiation settings. Legal professionals’ roles will shift to emphasize oversight, counsel, and ethics – ensuring the AI’s output is correct, relevant, and just. The value of human lawyers will concentrate in tasks requiring empathy, persuasion, and bespoke strategy, while a lot of the “heavy lifting” of law (reading, writing, analyzing) can be offloaded to AI.

Importantly, law is an area where explainability and trust are paramount – AI outputs need clear sources. We expect legal AI tools to increasingly provide detailed citations and reasoning paths for every answer, which is already a focus of AI legal startups. As one example, Harvey AI partnered with OpenAI to create a custom case law model that cites all its sources and was preferred by lawyers 97% of the time over vanilla GPT-4 in tests. This trend will continue, making AI a credible assistant in the eyes of bar associations and courts.

Architecture and Engineering

Architects and engineers are embracing AI to enhance design creativity, accuracy, and efficiency. In architecture, generative design and visualization tools are transforming how concepts are developed. Architects now use text-to-image generative AIs (like Midjourney, DALL-E) to instantly create photorealistic renderings of buildings from simple prompts. For instance, by typing a description of a building’s style, setting, and materials, an architect can generate dozens of conceptual images in hours, exploring ideas far faster than via hand sketches or traditional CAD mockups. These AI-generated images can be remarkably detailed – a recent update of Midjourney produced renders so sharp and realistic that clients could be fooled into thinking they were actual photographs of built structures. This helps architects iterate designs and communicate visions to clients early, accelerating the creative phase.

Beyond pretty pictures, AI is being integrated into CAD and BIM (Building Information Modeling) software to assist with technical design. Generative design algorithms allow engineers to input goals and constraints (e.g. a bridge that supports X load with minimal material, or a building layout that maximizes natural light and meets code) and then have the AI produce optimized design options. This approach is already used in engineering: Airbus, for example, used generative design software to create an aircraft cabin partition with an intricate “organic” structure that was lighter yet strong, something a human might not have drafted. In building design, AI-driven generative design can suggest floor plans that meet both aesthetic and regulatory requirements in seconds. According to a Construction Today report, architects and engineers can feed in constraints like materials, budget, and building codes, and let AI propose numerous viable design configurations. The AI essentially automates the trial-and-error process, presenting human designers with a menu of data-backed options.
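The generate, filter, and rank workflow described above can be illustrated with a deliberately small example. The Python sketch below enumerates rectangular beam sections, keeps those that satisfy a bending-stress limit and a depth-to-width proportion rule, and ranks survivors by material used. It uses brute-force enumeration rather than the optimization methods commercial generative design tools employ, and the load, span, allowable stress, and proportion limit are all hypothetical; real designs also need deflection, shear, and code checks.

```python
from itertools import product


def max_moment_nmm(load_n_per_mm: float, span_mm: float) -> float:
    """Peak bending moment of a simply supported beam under uniform load: wL^2 / 8."""
    return load_n_per_mm * span_mm ** 2 / 8


def bending_stress_mpa(moment_nmm: float, b_mm: float, h_mm: float) -> float:
    """Extreme-fiber stress M / S for a solid rectangle, with S = b h^2 / 6."""
    return moment_nmm / (b_mm * h_mm ** 2 / 6)


def generate_options(load, span, allowable_mpa, widths, depths, max_depth_ratio=4.0):
    """Enumerate candidate sections, drop those breaking a constraint, rank by material used."""
    moment = max_moment_nmm(load, span)
    options = []
    for b, h in product(widths, depths):
        if h / b > max_depth_ratio:          # hypothetical proportion rule
            continue
        stress = bending_stress_mpa(moment, b, h)
        if stress <= allowable_mpa:           # strength constraint
            options.append({"b": b, "h": h, "area": b * h, "stress": round(stress, 2)})
    return sorted(options, key=lambda o: o["area"])  # least material first


# Hypothetical inputs: 5 kN/m (= 5 N/mm) over a 4 m span, 10 MPa allowable stress.
best = generate_options(5, 4000, 10, widths=[50, 75, 100, 150], depths=range(150, 401, 50))
for option in best[:3]:
    print(option)
```

Here the proportion rule eliminates the otherwise lightest 50 x 350 mm section, which is exactly the kind of trade-off a designer then reviews among the ranked options.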

Engineers in various fields are also using AI for simulation and optimization. Structural engineers use ML models to predict how complex structures will behave under stress, supplementing traditional physics simulations to catch potential issues faster. Mechanical and aerospace engineers use AI to optimize shapes for aerodynamics or to design components that best meet performance criteria, sometimes producing unconventional but effective designs. Electrical engineers are seeing AI assist in circuit board design and chip layout; major chip designers now apply AI to chip floorplanning, in some cases placing large circuit blocks more efficiently than human experts.

For software engineers, AI has already become a powerful coding companion. Tools like GitHub Copilot (originally powered by OpenAI Codex) can autocomplete chunks of code or generate entire functions from comments. Developers report substantial productivity gains: in a controlled experiment run by GitHub, developers using Copilot completed a coding task 55% faster on average. These assistants not only speed up writing code but also help with debugging and explaining it. Surveys have found that programmers feel more “in the flow” and focused when using AI helpers; a McKinsey study noted that software developers experienced a 39% increase in time spent in a flow state when using generative AI coding tools. Over the next few years, we’ll see AI deeply integrated into IDEs (Integrated Development Environments): auto-generating boilerplate, suggesting improvements, and even managing entire project scaffolding. Some foresee a future where a single engineer, working with AI pair programmers, can accomplish what used to require a team.

In civil and construction engineering, AI and robotics are addressing labor shortages and safety. Autonomous construction machines (like AI-guided bulldozers or bricklaying robots) are in development to handle repetitive or hazardous tasks. BCG has projected that up to 30% of construction work could be automated by 2025. Robotic systems can already lay bricks, weld, and install drywall, in some tasks with speed and precision exceeding human workers; they use computer vision and sensors to operate on site, laying bricks in precise order or welding seams consistently. While full automation of construction isn’t here yet, in the next 5 years we expect to see semi-autonomous teams: a few human supervisors on site overseeing robotic laborers for the heavy lifting and mundane work, improving productivity and safety. AI can also enhance site management by analyzing project data to predict delays or optimize scheduling, and by monitoring safety via cameras (identifying whether workers are in danger or not wearing protective gear).

Overall, the engineering fields will benefit from AI taking over tedious calculations, simulations, and design iterations. Human engineers and architects will spend more time on high-level creative and critical decisions – deciding among AI-generated options, fine-tuning designs for human preferences, and addressing the social/environmental context that AI might miss. There’s also a sustainability angle: AI can help find design solutions that minimize waste and energy usage (e.g. optimizing a building’s energy model or finding a structure that uses less material for the same strength).

In the farther future, architects might work with AI co-designers that handle multimodal inputs: imagine sketching a floor plan by hand while an AI interprets it and generates full 3D models in real time, or an AI that instantly checks every proposed design change against applicable building codes and regulations, catching violations before plan review. Engineers might rely on AI for continuous monitoring of infrastructure: for instance, AI systems that watch sensor data from bridges, aircraft, or pipelines to predict maintenance needs or detect anomalies (some of this is already happening in “smart infrastructure” projects). The profession will increasingly blend human creativity with AI-driven analytical power.

Healthcare and Medicine (Doctors & Nurses)

Healthcare is witnessing some of the most consequential AI applications, aimed at improving diagnosis, treatment, and patient care efficiency. Medical AI systems are already diagnosing conditions from images and data with remarkable accuracy. In radiology, for example, AI image analysis tools can detect abnormalities in X-rays, MRIs, and CT scans, often as well as or better than human specialists. One study on chest X-rays found that an AI system achieved 99.1% sensitivity in identifying abnormal radiographs, compared with 72.3% for radiologist reports. In other words, the AI missed far fewer abnormal cases, catching subtle signs that humans overlooked. When the two work together, radiologist plus AI tends to outperform either alone, indicating that AI can serve as a high-performance second reader that boosts overall accuracy and consistency in medical imaging. Over the next few years, AI assistance is expected to become standard in radiology workflows: AIs triaging scans (prioritizing the most urgent cases), flagging suspected tumors or fractures for review, and even generating preliminary reports for the radiologist to verify. This can greatly speed up diagnosis, which is critical in emergency and resource-limited settings.
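Sensitivity is the share of truly abnormal cases a reader flags, TP / (TP + FN). The Python sketch below turns the two reported sensitivities into expected misses per 1,000 abnormal radiographs; the 1,000-case scale is illustrative only.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of truly abnormal cases that get flagged: TP / (TP + FN)."""
    return true_positives / (true_positives + false_negatives)


def expected_misses(sens: float, abnormal_cases: int) -> float:
    """Abnormal cases expected to go unflagged at a given sensitivity."""
    return abnormal_cases * (1 - sens)


# Sensitivities as reported in the study above; 1,000 cases is an illustrative scale.
for reader, sens in (("AI system", 0.991), ("radiologist reports", 0.723)):
    print(f"{reader}: about {expected_misses(sens, 1000):.0f} missed per 1,000 abnormal radiographs")
```

At those rates, roughly 9 versus 277 abnormal cases per 1,000 would go unflagged. Sensitivity says nothing about false alarms, which are measured by specificity, TN / (TN + FP), so the two must be weighed together.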

AI is also proving valuable in pathology (analyzing microscopic tissue slides), where it can identify cancerous cells or classify tissue types rapidly. Dermatology apps can look at a photo of a skin lesion and assess the likelihood of melanoma with high sensitivity. In ophthalmology, a DeepMind system developed with Moorfields Eye Hospital in London recommended referrals for more than 50 eye conditions from OCT scans as accurately as leading eye specialists, which could allow earlier detection of diseases such as age-related macular degeneration and diabetic eye disease.

Perhaps even more impactful in the near term is AI in clinical decision support. Large language models specialized in medicine (like Google’s Med-PaLM 2) can answer medical questions and suggest diagnoses from symptoms. In 2023, Med-PaLM 2 scored 86.5% on US Medical Licensing Exam (USMLE) style questions, placing it at an “expert doctor” level on that benchmark. This indicates that AI has begun to encode vast medical knowledge and can reason through clinical scenarios. We’re likely to see doctors consulting AI systems for difficult cases: for instance, entering a complex patient history and lab results and asking the AI for a differential diagnosis or treatment options, with evidence from the medical literature. Such systems might surface rare diseases a doctor didn’t consider or flag dangerous medication interactions by cross-referencing large databases.
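Flagging medication interactions is, at its core, a pairwise lookup against a curated database. The Python sketch below shows that core step; the drug names and interaction notes are hypothetical placeholders, not clinical data, and real systems draw on maintained pharmacological databases and patient context.

```python
from itertools import combinations

# Hypothetical interaction table (placeholders, not clinical data).
# Keys are unordered drug pairs, so (a, b) and (b, a) match the same entry.
INTERACTIONS = {
    frozenset({"drug_a", "drug_b"}): "major: increased bleeding risk",
    frozenset({"drug_b", "drug_c"}): "moderate: reduced absorption",
}


def check_interactions(medications: list[str]) -> list[tuple[str, str, str]]:
    """Return (drug, drug, note) for every listed pair found in the table."""
    meds = sorted({m.lower() for m in medications})  # normalize case, drop duplicates
    alerts = []
    for x, y in combinations(meds, 2):
        note = INTERACTIONS.get(frozenset({x, y}))
        if note:
            alerts.append((x, y, note))
    return alerts


print(check_interactions(["Drug_B", "drug_a", "drug_d"]))
```

Checking every pair grows quadratically with the medication list, which is trivial for a single patient but is one reason production systems index interactions carefully.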

In the day-to-day, AI assistants could reduce doctors’ administrative burdens. Natural language processing can transcribe and summarize doctor-patient conversations, reducing the time spent writing charts. Companies are developing AI “scribes” that listen in the exam room (or on telehealth calls) and automatically produce the visit note and even draft orders or referral letters. This frees physicians to focus more on the patient than the paperwork. Similarly, AI chatbots are handling routine patient inquiries and triage. For example, a chatbot might take in a patient’s symptoms via an app and advise whether they should seek care and with what urgency, or it might coach chronic disease patients through daily management (e.g. an AI diabetes coach reminding patients to check blood sugar, analyzing their readings, and giving tailored advice).

Another area is personalized treatment and drug discovery. AI can analyze a patient’s data (genetics, history) to help choose the best treatment, for instance predicting which cancer therapy a patient will respond to based on patterns learned from thousands of prior cases. On the pharma side, AI is accelerating the discovery of new drugs. In 2023, researchers used deep learning to identify abaucin, a compound active against drug-resistant A. baumannii, and, in a separate study, a new structural class of antibiotic compounds that kill MRSA; screening on this scale traditionally took years of trial and error. These results offer hope against superbugs and show AI’s ability to sift through chemical space for promising molecules. In the next few years, expect more drug candidates from AI screening pipelines to enter clinical trials, potentially leading to treatments for diseases that lacked them.

For clinicians, the 3–5 year horizon will involve integrating AI into the clinical workflow carefully. Many hospitals will employ AI for predictive analytics – e.g. predicting which patients are at risk of deteriorating (by analyzing vital signs and lab trends in real time) so that staff can intervene early. AI can also optimize operations: predicting ER admission surges, optimizing staff schedules, or identifying which patients can be safely discharged to free up beds.

By the latter half of the decade, routine diagnostics might be heavily automated: for instance, an AI system might automatically read and interpret an incoming chest CT, compare it with the patient’s previous scans and history, and generate a full report for a radiologist to sign off. Primary care doctors might rely on AI to monitor their patient panels – with AI screening electronic health records and alerting, say, if a diabetic patient hasn’t had a key test or if someone’s data indicates they might be becoming depressed. In surgery, robotic systems with AI are already assisting surgeons (for precision tasks or even autonomous actions in constrained tasks like suturing); this will advance further, though human surgeons will firmly remain in control for the foreseeable future.

The doctor-patient relationship will remain at the heart of care, but AI will act as an ever-present support, much like a super-resident or expert consultant always available in the background. One challenge will be ensuring the AI’s advice is trustworthy and unbiased. It’s critical that clinicians understand the recommendations and have final say, to avoid over-reliance on a tool that can sometimes err. Regulatory bodies like the FDA are actively working on frameworks to approve and monitor medical AI systems to ensure safety and efficacy.

Education (Teachers and Students)

Education stands to be profoundly influenced by AI, with changes already underway in both teaching and learning. Educators are leveraging AI as a teaching assistant and content creator, while students are embracing AI tutors (and grappling with academic integrity issues that come with them).

For teachers, one of the most promising developments is AI-powered tutoring and personalized learning platforms. For instance, nonprofit Khan Academy is piloting an AI tutor named Khanmigo, built on GPT-4, which can interact with students in a Socratic dialogue, guiding them to answers instead of just giving solutions. Khanmigo also serves as a classroom assistant for teachers – it can help draft lesson plans, create practice problems, or even role-play as a student who needs help, so teachers can practice responding. Early feedback suggests that when used properly, AI tutors can keep students more engaged by tailoring questions to their level and giving immediate, constructive feedback. Over the next few years, we’ll likely see AI tutors become commonplace: imagine each student having access to a “personal AI study buddy” at home that can explain any concept from their textbook, step through math problems, or help them practice a foreign language conversationally. This 24/7 availability of one-on-one help could reduce disparities, as even students who cannot afford private tutors get some form of personalized instruction.

Teachers can also offload time-consuming tasks to AI. Grading and assessment can be partially automated: AI can grade multiple-choice and fill-in answers easily, and even evaluate essays for things like grammar, coherence, and argument structure. While teachers will still oversee grading (especially for high-stakes evaluations), AI can pre-score or highlight sections of an essay that need attention, making human grading much faster. AI text generation is also handy for creating teaching materials: a teacher could ask an AI to generate a set of quiz questions on a chapter, or produce examples and analogies to use in class. Some educators use AI to produce differentiated materials, for example prompting “Explain the law of conservation of energy at a 5th-grade reading level” to get simpler explanatory text for struggling learners. Additionally, AI can help in lesson planning by suggesting activities or multimedia resources for a given topic (scouring the web or knowledge bases and summarizing ideas).

A notable experiment is happening with curriculum chatbots – some schools are introducing AI-driven chatbots in the classroom that students can ask for help when the teacher is busy. Rather than replacing the teacher, it’s like giving teachers an army of virtual teaching aides so no student’s question goes unanswered. However, teacher training is crucial here: educators need to learn how to best integrate AI into their teaching strategies (and how to verify the AI’s responses, since it can sometimes be wrong). Surveys show many teachers remain cautious but optimistic, seeing potential for AI to reduce burnout by taking over bureaucratic tasks (attendance, grading, making reports).

From the student perspective, AI is a double-edged sword. On one hand, students are enthusiastically adopting tools like ChatGPT to help with homework and studying. AI can explain tough concepts in different ways until the student “gets it,” much like a patient tutor. If a student is stuck on a calculus problem at 10 pm, an AI tutor can provide hints step by step. If they don’t understand a passage in Shakespeare, an AI can paraphrase it in modern language and then gradually elevate it back to the original text, helping comprehension. Adaptive learning software, powered by AI, can identify a student’s weaknesses (say, specific math skills) and adjust practice problems to focus on those, something that used to require meticulous work by teachers. Studies have found that well-designed adaptive learning systems can significantly improve mastery by pacing the material to each learner’s needs.
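The adaptive loop described above (estimate each skill, then target the weakest) can be sketched in a few lines. This Python illustration tracks mastery per skill as an exponential moving average of correct answers and serves practice from the lowest-scoring skill. The smoothing factor, the 0.5 starting estimate, and the class name are assumptions; production systems typically use richer models such as Bayesian Knowledge Tracing or item response theory.

```python
from dataclasses import dataclass, field


@dataclass
class SkillTracker:
    alpha: float = 0.3  # hypothetical smoothing factor: weight given to the newest answer
    mastery: dict[str, float] = field(default_factory=dict)

    def record(self, skill: str, correct: bool) -> None:
        """Blend the newest result into the running mastery estimate for a skill."""
        prev = self.mastery.get(skill, 0.5)  # uninformed starting estimate
        score = 1.0 if correct else 0.0
        self.mastery[skill] = (1 - self.alpha) * prev + self.alpha * score

    def next_skill(self) -> str:
        """Pick the weakest skill seen so far (raises ValueError if none recorded)."""
        return min(self.mastery, key=self.mastery.get)


tracker = SkillTracker()
for skill, correct in [("fractions", True), ("fractions", True),
                       ("decimals", False), ("percentages", True)]:
    tracker.record(skill, correct)
print(tracker.next_skill())
```

A moving average forgets old results gradually, so a student who masters a weak skill soon stops being drilled on it.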

On the other hand, the ease of getting AI-generated answers has sparked concerns about cheating and learning integrity. Already in 2023, schools saw cases of students submitting AI-written essays. Educators have responded with a mix of policies: some ban AI use outright for assignments, while others incorporate AI and teach students to use it responsibly (e.g. using AI to brainstorm and outline, but requiring students to write the final essay, or to disclose AI assistance). By necessity, there will likely be a new emphasis on oral exams, in-class writing, and project-based assessments to ensure students actually learn the material, not just have AI do their homework. We’re essentially adding a new literacy: AI literacy, meaning students need to learn how to prompt effectively, critically evaluate AI outputs, and use AI as a tool rather than a crutch.

In the next few years, it’s plausible that standardized tests themselves might change, perhaps toward open-book and even AI-permitted exams. These would focus less on regurgitating facts (since AI can do that) and more on critical thinking and problem-solving in collaboration with AI. Forward-thinking curricula may include assignments like “Use an AI to help you research this topic, but you must verify the sources and add your own insights,” thus testing students’ ability to fact-check and deepen AI output.

Another emerging use is AI for special education and language learning. AI speech agents can help children with autism practice social conversations in a safe, patient environment. Language learners can chat with an AI in the language they are learning, receiving corrections and cultural notes – like an ever-available language partner. These applications make learning more accessible and personalized.

Administratively, schools and universities will use AI to predict which students might be at risk of failing or dropping out (by analyzing patterns in performance and engagement), allowing early interventions. AI might also optimize scheduling (of classes, buses, etc.) to improve efficiency.

All these changes come with challenges: ensuring equity (not all students may have access to the latest AI – schools might need to provide it), maintaining privacy of student data, and retraining teachers. However, the consensus is that if used well, AI can augment education by providing personalization at scale – something that traditional one-size-fits-all teaching has struggled with. In a study, education experts described the vision as “every student having an AI-powered tutor and every teacher having an AI assistant”, which could dramatically improve learning outcomes if properly aligned with educational goals.

Notably, even educational content creation is being affected. Textbook publishers and edtech companies are using AI to create interactive books that answer students’ questions, or even to generate new problems on the fly. By 2030, a “classroom” might often involve students each following their own AI-optimized learning path for part of the day. Meanwhile, teachers would facilitate group discussions and projects and nurture social-emotional learning, aspects AI cannot replace.

A lineup of new humanoid robots from various companies (Boston Dynamics, Tesla, Agility, 1X, etc.) highlights the push toward general-purpose machines. These prototypes, rolling out between 2023 and 2025, aim to assist with labor tasks and even household chores.

Finance and Accounting (Accountants & Analysts)

The finance sector is experiencing a wave of AI-driven automation, particularly in accounting, auditing, and financial analysis. Accountants are using AI to automate routine, time-consuming tasks, allowing them to focus more on strategy and advising clients. For example, AI-powered software can automatically categorize expenses, reconcile accounts, and flag anomalies in financial records. Work that used to involve hours of manual data entry (reading bank statements or invoices and entering them into ledgers) can now be handled by machine learning models. These models recognize patterns and can even detect errors, such as a double billing or an out-of-pattern purchase that might indicate fraud. According to industry reports, firms that have adopted AI for these processes report fewer errors and significant time savings, as the AI can process documents many times faster than a person.
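
A crude version of “out-of-pattern purchase” detection can be sketched with basic statistics. This is an illustrative assumption, not how any particular accounting product works. It compares a new charge with a vendor’s past charges and flags the charge when it sits more than a chosen number of standard deviations from the mean. Production systems typically score deviation from a learned baseline across many features, but the core idea is the same.

```python
import statistics

def is_out_of_pattern(past_amounts, new_amount, threshold=3.0):
    """Flag new_amount if it lies more than `threshold` standard deviations
    from the mean of past_amounts (requires at least two past amounts)."""
    mean = statistics.mean(past_amounts)
    stdev = statistics.stdev(past_amounts)
    if stdev == 0:
        # Every past charge was identical, so any different amount is unusual.
        return new_amount != mean
    z_score = abs(new_amount - mean) / stdev
    return z_score > threshold

# Hypothetical monthly charges from one office-supply vendor (illustration only).
history = [120.0, 115.0, 130.0, 125.0, 118.0]
print(is_out_of_pattern(history, 124.0))  # → False (typical charge)
print(is_out_of_pattern(history, 480.0))  # → True (far outside the usual range)
```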

In auditing, the Big Four accounting firms have been early adopters of AI. Auditors use AI to analyze entire data sets rather than samples, making audits more thorough. For instance, EY has embedded AI to analyze and extract information from unstructured documents (like contracts or leases) and to test transactions at scale for irregularities. This means an AI might go through every single transaction a company made in a year (millions of entries) and cross-check each one against receipts, looking for any that don’t match. That would be impossible to do fully by hand. Such AI tools can also be fed industry data and regulatory rules, helping auditors identify the risk of material misstatement or fraud by comparing a company’s financial patterns to expected norms. Deloitte developed an AI document review platform that evaluates contract provisions across a company’s entire contract repository, which greatly speeds up due diligence in mergers.
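
A simple matching routine shows the difference between sampling and testing the full population. In this hypothetical sketch, every ledger transaction is checked against receipts that share its reference number. Each receipt can be used only once, and anything left unmatched becomes an exception for a human auditor to review. The field names (`ref`, `amount`) and the one-cent tolerance are assumptions made for illustration.

```python
from collections import defaultdict

def unmatched_transactions(transactions, receipts, tolerance=0.01):
    """Check every transaction (not a sample) against the receipts.

    A transaction is matched by an unused receipt with the same reference
    whose amount is within `tolerance`. Unmatched transactions are returned
    as exceptions for human review.
    """
    available = defaultdict(list)  # reference -> amounts of unused receipts
    for receipt in receipts:
        available[receipt["ref"]].append(receipt["amount"])
    exceptions = []
    for txn in transactions:
        candidates = available[txn["ref"]]
        match = next((amt for amt in candidates
                      if abs(amt - txn["amount"]) <= tolerance), None)
        if match is None:
            exceptions.append(txn)
        else:
            candidates.remove(match)  # each receipt supports only one transaction
    return exceptions

# Hypothetical ledger and receipts (illustration only).
transactions = [
    {"ref": "INV-1", "amount": 250.00},
    {"ref": "INV-2", "amount": 99.90},
    {"ref": "INV-2", "amount": 99.90},   # billed twice, one receipt
    {"ref": "INV-3", "amount": 1200.00}, # amount differs from receipt
]
receipts = [
    {"ref": "INV-1", "amount": 250.00},
    {"ref": "INV-2", "amount": 99.90},
    {"ref": "INV-3", "amount": 1020.00},
]
for txn in unmatched_transactions(transactions, receipts):
    print("exception:", txn)
```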

Accounting firms also use AI in forecasting and analysis. Machine learning models can forecast cash flow or demand more accurately by finding complex relationships in historical data that basic spreadsheet models might miss. Investment analysts are increasingly using AI to parse market data, news, and even earnings call transcripts – JPMorgan famously has an AI that can decode Federal Reserve statements for sentiment to anticipate market reactions.

A big shift is the introduction of generative AI to produce reports and insights. PwC, for example, gave its employees an enterprise ChatGPT tool to help draft audit reports and technical documentation; they reported a 20% to 50% productivity improvement in certain development and documentation processes with these internal AI tools. An accountant can have an AI draft a first version of a financial analysis or client report, including charts and explanations, which the accountant then reviews and tweaks. This cuts the tedious blank-page writing time and ensures consistency. It’s like having a junior analyst always on hand to assemble the pieces.

In the next few years, smaller accounting firms and even individual professionals will gain access to similar AI capabilities, either through software vendors or open-source tools. We might see “autonomous finance agents” that can monitor a company’s books continuously. Imagine an AI that not only updates the ledger in real time as transactions come in, but also sends alerts: “Liquidity is getting low compared to upcoming expenses, consider transferring funds or cutting costs,” or “This invoice looks irregular compared to past ones, I’ll flag it for review.” Such proactive insight can vastly improve financial management for businesses.
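
The liquidity alert in this scenario reduces to a simple rule. The sketch below assumes a hypothetical list of upcoming payments with due dates, a current cash balance, and a 30-day look-ahead window. A real finance agent would also forecast inflows and tune its thresholds, but the core check compares what is due against what is on hand.

```python
from datetime import date, timedelta

def liquidity_alert(balance, upcoming, today, horizon_days=30, buffer=0.0):
    """Return a warning if cash on hand, less payments due within the
    horizon, would fall below `buffer`; otherwise return None."""
    cutoff = today + timedelta(days=horizon_days)
    due = sum(amount for due_date, amount in upcoming
              if today <= due_date <= cutoff)
    if balance - due < buffer:
        return (f"Liquidity warning: {due:,.2f} due by {cutoff.isoformat()} "
                f"against a balance of {balance:,.2f}")
    return None

# Hypothetical payables as (due date, amount); illustration only.
payables = [
    (date(2025, 3, 5), 25_000),
    (date(2025, 3, 20), 18_000),
    (date(2025, 5, 1), 50_000),  # falls outside the 30-day window
]
print(liquidity_alert(40_000, payables, today=date(2025, 3, 1)))
```

Raising `buffer` above zero makes the agent warn earlier, before the balance would actually go negative.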

Tax preparation is another area ripe for AI. AI can work through the mountain of rules and exceptions in tax codes to optimize filings. For instance, it can analyze last year’s return and the year’s financial records to suggest deductions or flag missing forms. Some advanced tax software uses AI to double-check returns for errors or missed opportunities, reducing the chance of audits or penalties. In auditing as well, AI might evolve from just finding issues to fixing them, e.g. by automatically proposing adjusting entries to correct errors it finds.

Financial advisors may use AI to personalize investment advice. Robo-advisors already allocate assets based on algorithms, but with new AI, they could have more nuanced conversations with clients (via chat or voice) to understand their goals and risk tolerance, then educate them and adjust portfolios accordingly. The human advisor’s role would shift to overseeing these AI recommendations and managing client relationships, rather than doing all analysis solo.

One interesting development to watch is AI in fraud detection and compliance. Banks have long used algorithms to spot credit card fraud (anomalous transactions), but AI can enhance this by combining more data sources and context. In the corporate world, AI tools are being used to detect internal fraud or waste. For example, they can flag an employee who submits the same expense twice under different categories, or procurement prices that seem off compared to market rates. Compliance departments are using AI to monitor communications (emails, chat) for signs of insider trading or policy violations, a task human compliance officers could never perform across every message.
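
The duplicate-expense example can be sketched as a grouping problem. This is a hypothetical rule, not a description of any specific compliance tool. Claims from the same employee on the same date for the same amount are grouped together. Any group that spans more than one expense category is flagged as a possible double submission. The field names are assumptions.

```python
from collections import defaultdict

def cross_category_duplicates(expenses):
    """Group claims by (employee, date, amount) and return the groups that
    appear under more than one category: possible double submissions."""
    groups = defaultdict(list)
    for claim in expenses:
        key = (claim["employee"], claim["date"], round(claim["amount"], 2))
        groups[key].append(claim)
    return [claims for claims in groups.values()
            if len({c["category"] for c in claims}) > 1]

# Hypothetical expense claims (illustration only).
expenses = [
    {"id": 1, "employee": "E17", "date": "2025-02-03", "amount": 84.50, "category": "Meals"},
    {"id": 2, "employee": "E17", "date": "2025-02-03", "amount": 84.50, "category": "Client entertainment"},
    {"id": 3, "employee": "E17", "date": "2025-02-04", "amount": 84.50, "category": "Meals"},
    {"id": 4, "employee": "E22", "date": "2025-02-03", "amount": 84.50, "category": "Travel"},
]
for group in cross_category_duplicates(expenses):
    print("possible duplicate claims:", [c["id"] for c in group])
```

Requiring different categories keeps legitimate repeats (for example, two identical meal claims filed once each on different days) out of the flagged set. A reviewer still makes the final call.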

By 2030, we can imagine much of the mechanical work of accounting being almost fully automated. The month-end close process might become continuous, with AI reconciling ledgers every minute. Audits could be “real-time,” with AI auditing transactions as they occur, so that annual audits become more of a formality. The financial close and reporting for corporations could be done in a day with AI, versus several days or weeks currently. Governments might even require certain AI-driven checks to ensure compliance and reduce the chance of corporate scandals, given AI’s ability to catch issues early.

For finance professionals, the emphasis will move to interpretation, strategic decision-making, and client service. The number-crunching and bookkeeping will largely happen unseen in the background, done by AI. But human judgment will remain vital: someone needs to decide what to do with the insights the AI provides, approve significant adjustments, and handle complex cases that don’t fit patterns. Moreover, while AI can tell you what is, it might not fully grasp why, or what should be done in a business context; that’s where accountants as advisors step in.

Trust and transparency will be key: just as with other fields, finance AI will need to explain its findings (especially for audits – auditors need to defend their audit to regulators). We expect AI in this sector to come with strong documentation, logs, and controls to satisfy regulatory standards like Sarbanes-Oxley.

Science and Research (Scientists & Mathematicians)

Scientists across disciplines are harnessing AI to accelerate discovery and handle the deluge of data modern research generates. In the life sciences, AI has already revolutionized areas like protein folding and drug discovery. A landmark achievement was DeepMind’s AlphaFold, which in 2022 predicted the 3D structures of ~200 million proteins (essentially all proteins known to science) and released them in a public database. Determining a protein structure experimentally used to take months or years. AlphaFold provided a treasure trove in a matter of months, allowing biologists worldwide to understand proteins of interest without costly lab crystallography. This has turbocharged fields like structural biology and drug design: researchers can now model how a protein (say, from a virus or cancer cell) looks and then design drugs that fit into its pockets. In the next 5 years, these AI-predicted structures are likely to contribute directly to new medications (companies are already using AlphaFold outputs to identify targets for new drugs).

AI is making waves in chemistry and materials science as well. Machine learning models can predict properties of molecules or materials (like reactivity, strength, conductivity) before they are synthesized, guiding scientists on what to make next. Generative models can even propose novel molecular structures with desired properties, “inverting” the usual trial-and-error approach. For example, given a target like “a compound that kills bacteria X but is not toxic to human cells,” generative AI can suggest candidate molecules, which are then tested in the lab. A related machine-learning screening approach helped identify a new antibiotic candidate, abaucin (reported in 2023), that is effective against Acinetobacter baumannii, a drug-resistant superbug. Researchers trained a neural network on roughly 7,500 lab-tested molecules and used it to rank thousands of other compounds in silico. They then validated the top hits in the lab. Such AI-guided discovery shortens the drug development timeline by focusing experimental efforts on high-probability hits.

In physics and engineering research, AI assists with analyzing complex simulations and experiments. For instance, in particle physics, AI algorithms sift through huge volumes of collision data to spot rare events that might indicate new particles. In astronomy, machine learning is used to classify galaxies, find exoplanets in telescope data, and even detect gravitational-wave signals amid noise. These tasks are like finding needles in haystacks, and AI’s pattern-recognition prowess is invaluable. As telescopes and experiments become more powerful, data volumes grow (e.g., the Square Kilometre Array telescope will produce enormous data streams). Only AI-level automation can handle the initial processing and flag interesting phenomena for scientists to examine.

A particularly fascinating area is AI assisting in pure mathematics and theoretical research. Normally, one wouldn’t think an algorithm could make creative insights in math, but it’s starting to happen. DeepMind collaborated with mathematicians to apply AI in conjecture discovery – notably, their AI helped uncover a surprising connection between algebraic and geometric invariants in knot theory, leading to a new theorem being proved. It also assisted a mathematician in making progress on a 40-year-old conjecture in representation theory (related to Kazhdan-Lusztig polynomials). The AI did this by analyzing large data sets of examples and suggesting patterns that humans hadn’t noticed. In essence, AI can crunch through examples of a mathematical object and propose a hypothesis (conjecture) about them, which the human mathematician can then try to prove. This doesn’t replace mathematical rigor – a proof still requires human ingenuity – but it’s like providing an intuition or educated guess that might have taken decades to come by. Over the next few years, more mathematicians may use AI as a kind of super-research assistant: for exploring large combinatorial spaces or formulating conjectures in areas like graph theory, number theory, etc. We might see a cascade of new conjectures (some of which will be proven, some disproven) fueled by AI pattern-finding.

In climate and environmental science, AI is crucial for modeling and mitigation strategies. Climate models produce vast outputs; AI can help analyze and simplify these, identifying key factors or building faster predictive models. For example, AI can learn the behavior of complex climate simulations and then emulate them at a fraction of the computational cost, allowing more scenarios to be tested. In ecology, AI analyzes sensor data (like camera trap photos or audio recordings in rainforests) to monitor biodiversity, identify species, and detect poaching or deforestation in real time. These tasks would be impossible at scale without automation.

Another domain is neuroscience and brain-computer interfaces, where AI helps decipher neural signals. Brain imaging data and electrical recordings are extremely noisy and complex. Machine learning is being used to find patterns that correlate with diseases, or to help prosthetic devices interpret a person’s neural signals (e.g., allowing paralyzed patients to control a robotic arm by thought, with AI decoding their brain activity). In the coming years these interfaces are expected to improve, potentially restoring more function to people with disabilities.

Collaboration between humans and AI in research is likely to deepen. One can imagine something like an “AI research co-author” that is listed in papers as contributing analysis – indeed, we’ve already seen AI systems credited in some scientific publications for their role (for instance, in solving structures or analyzing data). The role of the human scientist may shift more to defining problems, designing experiments, and interpreting results in context, while letting AI handle brute-force data analysis or initial drafting of findings. Some journal papers are now being partially written by AI (e.g., to generate the first draft of the methods section, which a human then edits for correctness).

AI-generated media will probably be the first to showcase “digital humans”: avatars that exist on computer screens and as holograms.

A glimpse of a possible future: a scientist might feed all relevant literature on a question into a large-context AI and have it generate a comprehensive review, highlighting gaps in knowledge. The scientist then asks the AI to propose experiments to fill those gaps, perhaps even simulating some outcomes, and uses those suggestions to guide actual lab work. This interplay could drastically shorten the cycle of trial and error.

However, caution is warranted. Reproducibility and validation remain core to science – any AI-generated hypotheses or analyses must be verified through experiments or rigorous proofs. AI might introduce biases if it’s trained on biased data, something scientists will have to be mindful of (e.g., if an AI is trained on historical clinical trial data that lack diversity, its suggestions might not be universally valid). The scientific method won’t be replaced; AI becomes another set of tools to extend human capabilities, much like statistics or the microscope did in the past.

In sum, AI is becoming an amplifier for human intellect in research: reading more papers than any one person could, testing more possibilities, and crunching more data. As a result, the pace of discovery could accelerate in the coming years, potentially leading to breakthroughs (new materials, cures, technologies) sooner than expected. It’s even conceivable that AI might help solve some currently intractable problems by 2030 – for example, finding a viable room-temperature superconductor material through searching chemical space, or formulating a unifying theory in some area by analyzing mountains of data. If and when that happens, it will raise profound questions about credit and the nature of discovery, but that’s a good problem to have because it means progress.

Aviation and Transportation (Pilots & Drivers)

In aviation, AI is set to augment pilots and potentially reshape how aircraft are operated, though this will be a gradual and carefully regulated change. Modern airplanes already rely heavily on automation (autopilot, flight management computers, etc.), but upcoming AI will take this further into the realm of intelligent autonomy and decision support.

One of the bold ideas being explored is the concept of single-pilot or even pilotless commercial flights with AI taking on the role of a second pilot. Today, commercial airliners fly with two pilots (captain and first officer) to ensure redundancy and distribute workload. Aviation leaders have suggested that with advanced AI, the industry could move to having one pilot in the cockpit assisted by an “AI co-pilot” that never tires or gets distracted. Tim Clark, president of Emirates Airline, stated in 2023 that one-pilot planes are a real possibility thanks to rapid AI developments, urging the industry to “take time to look at what [AI] could do to improve what you do”. The idea is that an AI system could monitor all flight instruments, handle communications, and even execute standard procedures on its own, leaving the human pilot to oversee and handle exceptions.

In fact, the European aviation regulator (EASA) has been researching extended minimum crew operations, such as single-pilot operation during the cruise phase. Its timeline might see some form of this late this decade, likely starting with cargo flights. The AI co-pilot would act like a highly skilled first officer: able to fly the plane in normal conditions and even land it autonomously in an emergency if the human pilot becomes incapacitated. Airbus tested an AI system that could taxi, take off, and land an aircraft without human input, completing those tests successfully in 2020. Building on this, by 2025–2027 we might see cargo aircraft certified for single-pilot operation with ground-based monitoring or AI backup, as a proof of concept.

For passenger flights, regulatory and public acceptance issues mean that fully autonomous planes are likely further out (beyond 5 years). However, pilots will increasingly use AI as a support tool. This includes AI systems that can talk with Air Traffic Control (ATC) via natural language processing (relaying instructions or requesting changes), so the pilot can focus on flying. AI can also assist in route optimization: for instance, if there’s unexpected weather, an AI could quickly suggest the best new course or altitude to save fuel and avoid turbulence, considering far more variables in real-time than current flight management systems. Another help is in abnormal situations – say an engine failure or other malfunction – where AI can rapidly run through checklists, diagnose the problem (using sensor data), and recommend the optimal solution or even take corrective action immediately. This can reduce human error in emergencies, where pilots are under stress.

In aircraft maintenance and operations, AI will play a major role too. Predictive maintenance systems using AI analyze data from sensors on engines and parts to predict failures before they happen, allowing for proactive repairs and reducing downtime. This makes flights safer and more cost-efficient. Ground operations might use AI for air traffic management: some airports are testing AI to sequence takeoffs and landings more efficiently, reducing wait times and fuel burn.
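
At its simplest, predictive maintenance means projecting a sensor trend forward to estimate when a part will cross a safe limit. The sketch below substitutes an ordinary least-squares line for the machine learning models real systems use. The readings and the limit are hypothetical values chosen for illustration.

```python
def hours_until_limit(readings, limit):
    """Estimate operating hours left before a sensor value reaches `limit`.

    Fits a least-squares line to (hour, value) readings, which must be sorted
    by hour and must not all share the same hour, then extrapolates it.
    Returns None if the trend is flat or falling.
    """
    n = len(readings)
    mean_t = sum(t for t, _ in readings) / n
    mean_v = sum(v for _, v in readings) / n
    sxx = sum((t - mean_t) ** 2 for t, _ in readings)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in readings)
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_v - slope * mean_t
    hour_at_limit = (limit - intercept) / slope
    # Zero means the trend line is already at or past the limit.
    return max(0.0, hour_at_limit - readings[-1][0])

# Hypothetical engine-vibration readings as (operating hour, level).
readings = [(0, 1.0), (100, 1.2), (200, 1.4), (300, 1.6)]
print(hours_until_limit(readings, limit=2.5))  # roughly 450 hours left
```

Maintenance planners could then schedule the part replacement for a routine stop well before that estimate, instead of waiting for a failure.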

For air travel passengers, one might see AI-driven improvements like personalized updates or even AI assistants at airports (some airlines are trialing robot customer service agents). But the most significant change for passengers, though they may not notice it, is improved safety. Boeing and Airbus are both embedding more AI in flight control systems to help pilots avoid hazardous situations (like stalls or taxiway excursions). Boeing’s upcoming designs, for example, are expected to integrate AI that learns from vast flight data to provide better alerts and possibly to take corrective action faster than current systems.

When it comes to other transportation roles such as drivers (trucks, taxis), autonomous driving on the road has been a hot topic. Self-driving car technology has proven harder than initially expected, but incremental progress continues. In the next 3–5 years, we may see more autonomous trucks on highways in pilot programs, with human safety drivers at first. Several companies are working on AI for long-haul trucking that can handle highway driving, with humans taking over for the tricky last miles on city streets. By the late 2020s, dedicated freight corridors could plausibly see regular autonomous convoys. For taxis, Waymo already operates robotaxis in limited areas of several cities, and other companies have run similar services (Cruise suspended its driverless operations in late 2023). These services will likely expand to more cities and larger geofenced areas. This could affect professional drivers (taxi, rideshare, delivery), an everyday role worth noting. Their jobs may shift to oversight or to handling more complex routes, while AI handles the simpler or high-demand ones.

For pilots specifically, an AI-rich cockpit might change training: pilots will need to learn to manage AI systems and trust them appropriately (but also know how to fly without them in rare cases). “Automation management” has been part of pilot training for decades, but now it will include understanding machine learning outputs, maybe even interacting via voice with AI (“Assist me with diversion options”). There could even be an AI instructor in simulators that watches a pilot trainee’s performance and gives adaptive feedback.

Looking further ahead, possibly beyond 5 years but glimpsed now, are uncrewed aerial vehicles (UAVs) for cargo or even passengers (drones and air taxis). Companies are making autonomous passenger drones (essentially flying taxis) that could ferry people in urban areas without a pilot on board. These rely on AI for navigation and safety (with remote human oversight). By late 2020s, some cities might introduce these on a trial basis, which will be a visible instance of AI in transportation for the public.

At sea, AI will similarly assist ship captains or even enable unmanned cargo ships on set routes (this is being tested too), and on rail, autonomous trains are already running in some places (like certain metro systems). So across transportation, the role of human operators is shifting from direct control to supervisory control. Pilots and drivers become more like mission managers, intervening only when needed. This can greatly increase efficiency (a single operator could oversee multiple autonomous vehicles, perhaps).

It should be emphasized that safety is the paramount concern. Aviation in particular moves cautiously – any AI system in the cockpit will be rigorously tested and likely initially only assist rather than fully control. There will be backup systems and the human will be the final authority for quite some time. Nonetheless, the trajectory is clear: AI is co-piloting more and more.

In summary, for pilots, AI means less menial workload and potentially smaller crews, but also a need to stay vigilant in new ways (monitoring an AI that’s doing the flying). A scenario often cited is that the “cockpit of the future” might have one human pilot and one AI, with a remote pilot on the ground as a backup. This could address pilot shortages and reduce costs, but only if shown to meet or beat today’s safety levels. Given that human error is a factor in many accidents, a properly designed AI assistant could indeed make flying even safer by catching human mistakes. For the flying public, these changes may be invisible or slow – but over time, flights might become marginally cheaper and more reliable as automation efficiencies kick in.

Sports and Athletics (Athletes & Coaches)

From professional athletes to weekend warriors, AI is increasingly being used to enhance training, strategy, and even the fan experience. Athletes and coaches are tapping into AI-driven analytics to gain a competitive edge and prevent injuries.

One major area is personalized training programs. Traditionally, training regimens are crafted by coaches using experience and some data (like past performance). Now, AI systems can analyze an athlete’s performance data in depth – including metrics from wearable sensors (heart rate, speed, acceleration, jump height, etc.), video of their play, and even biomechanical data – to tailor training down to fine details. For example, an AI might detect that a soccer player’s sprint speed declines in the last 15 minutes of a game and correlate it with their recovery patterns, suggesting specific endurance training or nutritional interventions. Data-driven platforms can optimize exercise selection, intensity, and volume for each athlete by learning what yields the best results for similar athlete profiles. An AI might tell a basketball player to focus on certain drills that have historically improved their shooting after analyzing thousands of practice shots.

In fact, AI systems now ingest sports-specific metrics to recommend training adjustments in real time. Consider a runner: AI could analyze stride length, ground contact time, and fatigue indicators from a smart insole, and then advise mid-run or mid-week to adjust pacing or form. Some running apps already use simple AI to adapt training plans on the fly if you miss workouts or if your heart rate data indicates overtraining. In elite sports, teams are adopting AI to monitor training load and prevent injury – by recognizing patterns (e.g., a subtle change in a pitcher’s throwing motion that often precedes a shoulder injury).

Injury prediction and prevention is a major application. Professional teams gather large amounts of data on player conditioning and game exposure. Machine learning models can predict the likelihood of an injury if a player exceeds certain loads or if certain muscle imbalances are present. As a result, coaches can be alerted to rest a player or modify training before a serious injury happens. We’ve seen this in sports like cricket and NBA basketball, where scheduling and rest recommendations are increasingly data-informed.
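
One widely used, though debated, load-monitoring heuristic in sports science is the acute:chronic workload ratio. It compares the last week’s training load with the average weekly load over the last four weeks. The sketch below uses it as a simple stand-in for the machine learning models described above. The 1.5 alert threshold is a commonly cited rule of thumb, treated here as an assumption rather than a validated cutoff.

```python
def acwr(daily_loads):
    """Acute:chronic workload ratio over the most recent 28 days.

    Acute load = total of the last 7 days.
    Chronic load = average weekly total across the last 28 days.
    """
    if len(daily_loads) < 28:
        raise ValueError("need at least 28 days of load data")
    last_28 = daily_loads[-28:]
    acute = sum(last_28[-7:])
    chronic = sum(last_28) / 4
    if chronic == 0:
        return 0.0  # no recent training at all, so no spike to report
    return acute / chronic

def needs_rest_alert(daily_loads, threshold=1.5):
    """Flag a sharp spike in load relative to the athlete's recent baseline.
    The 1.5 threshold is an assumed rule of thumb, not a validated cutoff."""
    return acwr(daily_loads) > threshold

# Hypothetical daily session loads (e.g. minutes x perceived exertion).
steady = [300] * 28
spike = [300] * 21 + [600] * 7
print(acwr(steady), needs_rest_alert(steady))  # → 1.0 False
print(acwr(spike), needs_rest_alert(spike))    # → 1.6 True
```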

Skill analysis and enhancement is another focus. Computer vision AI can break down video of an athlete’s technique – be it a tennis serve, a golf swing, or a weightlifting movement – and compare it against ideal models or that athlete’s past self. The AI can then provide feedback as specific as “your elbow angle is 5 degrees off in the follow-through, which is reducing power.” There are apps for basketball that track your shots via your phone camera and give immediate feedback on arc, depth, and left-right deviation, helping players adjust their shooting technique. In soccer, companies use AI to analyze player movements in games to evaluate decision-making: for instance, did a player often miss seeing an open teammate to pass to? The AI can highlight those situations for the player to review and learn from.
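
Feedback such as “your elbow angle is 5 degrees off” ultimately comes from geometry on body keypoints. Assume a pose-estimation model has already produced 2D coordinates for the shoulder, elbow, and wrist (that step is not shown). The joint angle, and a comparison to a hypothetical target angle, could then be computed like this:

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at point b formed by segments b->a and b->c,
    e.g. shoulder-elbow-wrist gives the elbow angle."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding
    return math.degrees(math.acos(cos_angle))

def form_feedback(measured, target, tolerance=2.0):
    """Turn a measured elbow angle into a short coaching cue.
    The target angle is a hypothetical value, not a biomechanical standard."""
    diff = measured - target
    if abs(diff) <= tolerance:
        return "Elbow angle looks good."
    # An angle smaller than the target means the joint is too bent.
    cue = "straighten the arm slightly" if diff < 0 else "bend the elbow slightly"
    return f"Elbow angle is {abs(diff):.0f} degrees off target; {cue}."

# Hypothetical keypoints from one video frame (normalized coordinates).
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
angle = joint_angle(shoulder, elbow, wrist)
print(form_feedback(angle, target=95.0))
```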

Team strategy and tactics benefit from AI through advanced analytics. Sports have embraced “Moneyball”-style data analysis for years, but now AI can find more complex patterns. For example, in American football, AI can analyze formations and plays of opponents to identify tendencies far beyond human film study – like a certain lineman’s subtle stance meaning a pass play is coming 80% of the time. In football (soccer), coaches use AI to simulate opposing team behaviors and identify weaknesses in their formations. This leads to more informed game plans. Some teams even simulate games thousands of times with different tactical tweaks (like a chess AI exploring moves) to see which strategies yield the best outcomes, then practice those.
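
The “simulate games thousands of times” idea is Monte Carlo simulation. The toy model below is purely illustrative and rests on several hypothetical assumptions: fixed shooting percentages, 100 possessions per game, and an opponent whose score is normally distributed. It estimates a win probability for different shares of three-point attempts. Real team simulators model far more (defense, fatigue, lineups), but the mechanics are the same: repeat a randomized game many times and compare the outcomes.

```python
import random

def simulate_game(rng, three_rate, p3=0.36, p2=0.52, possessions=100,
                  opp_mean=108.0, opp_sd=11.0):
    """Play one simulated game; return True if our team outscores the opponent.

    On each possession the team attempts a three with probability `three_rate`
    (made with probability p3), otherwise a two (made with probability p2).
    All default parameters are hypothetical illustration values.
    """
    points = 0
    for _ in range(possessions):
        if rng.random() < three_rate:
            points += 3 if rng.random() < p3 else 0
        else:
            points += 2 if rng.random() < p2 else 0
    opponent_points = rng.gauss(opp_mean, opp_sd)
    return points > opponent_points

def win_probability(three_rate, n_games=5_000, seed=42):
    """Estimate the win probability for one tactic by repeated simulation."""
    rng = random.Random(seed)  # fixed seed makes results reproducible
    wins = sum(simulate_game(rng, three_rate) for _ in range(n_games))
    return wins / n_games

for rate in (0.1, 0.35, 0.6):
    print(f"three-point share {rate:.2f}: win probability {win_probability(rate):.3f}")
```

Because the seed is fixed, reruns give identical numbers. Increasing `n_games` narrows the sampling noise so that small differences between tactics become trustworthy.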

Even during games, AI is starting to assist. While live use is limited by rules, some sports allow real-time telemetry: for example, sailing or Formula 1 racing teams use AI to constantly compute optimal routes or car settings given conditions, feeding suggestions to the crew/driver. In Formula 1, AI helps analyze tire wear, fuel, and opponent behavior to decide pit stop timing – essentially strategy optimization in real-time.

For the broader category of “athletes” including casual athletes or fitness enthusiasts, AI is coming through personal fitness apps and devices. Smartwatches already give AI-driven insights (“Your recovery is low today, consider a light workout”). Expect these to become more nuanced: e.g., an app noticing you often get injured when upping mileage and proactively adjusting your running plan to ramp more slowly, or even detecting signs of health issues from subtle changes in your vitals during exercise.

Furthermore, sports entertainment uses AI to enhance how athletes and performances are understood. Broadcasters use AI to generate advanced stats (like expected goals in soccer, or the probability of shot success given distance and defense in basketball). They can also create visualizations, for instance showing the most likely path a golfer should take on a hole, or overlaying a top player’s movement patterns on screen in augmented reality. These insights inform audiences and also feed back to players who study game film.

Within 3–5 years, we might see AI deeply integrated into everyday coaching. Many coaches (high school, college, pro) will have AI assistants that do the first pass of data crunching, producing a “report” after each game or practice. This leaves coaches to apply the human touch: motivating players, focusing on the mental game, and implementing changes AI suggests in a way that gets players to buy in. Athletes themselves, who are increasingly data-savvy, will use AI as part of training. Examples might include an app that analyzes your baseball swing and tells you if your timing was off, or smart glasses that project guidance during practice (like a strike-zone overlay for pitchers to aim at, based on AI analysis of a batter’s weak spots).

Another aspect is talent scouting and development. AI can analyze video of young athletes to project their potential or fit for a team. This might reduce bias and help uncover overlooked talent by focusing on performance data. Conversely, athletes can use AI to create highlight reels or performance summaries that showcase their skills to scouts.

Longer-term, with more wearables, even in-game decisions for players could be guided. For instance, a quarterback might have an AI system that quickly draws from vast defensive footage to suggest the best receiver to target given the defense’s formation (though at the speed of a play, that’s tough – it may be more plausible in slower sports or breaks in play).

One must note, however, that sports also rely on human creativity, intuition, and psychology. AI might say “Player X has a 70% chance to score if they go right,” but the opponent might also know that pattern, so gamesmanship comes into play. Thus, AI will be an adviser, not the final decision-maker. The best athletes often thrive on unpredictability and adaptation, which AIs can learn from but not always replicate, especially when opponents also adapt.

Overall, athletes who embrace AI guidance could improve faster and potentially extend their careers (through injury prevention and recovery monitoring). On the flip side, those in sports who don’t leverage these tools might find themselves at a disadvantage in preparation and training efficiency. It’s similar to how video analysis became standard – now AI analysis will become standard.

At the recreational level, these enhancements mean people can train smarter, avoid injury, and perhaps enjoy sports more by seeing improvement. AI coaches for activities like yoga or weightlifting could correct form to prevent injury (some apps already use your phone camera to count reps and check form). We may reach a point where everyone has access to advice that was once available only from professional coaches.

Skilled Trades and Manual Labor (Skilled Workers & General Laborers)

AI and automation are not just for desk jobs – they are also transforming skilled trades, manufacturing, and other manual labor sectors. The impact here often takes the form of robotics and intelligent machines that work alongside humans or take over repetitive tasks, as well as AI-driven tools that enhance workers’ capabilities.

In manufacturing and warehousing, robotics with AI vision are becoming more flexible and capable. We already have robot arms that can sort products, assemble components, or weld consistently. Traditionally, robots did rote tasks in structured environments. Now, AI is giving them more “eyes and brains” to handle variability. For instance, an AI-powered robot can pick and pack items of various shapes by visually identifying them and figuring out how to grasp each one, something that used to be hard for automation. Amazon warehouses already use robots for fetching goods, and more advanced ones that can individually handle items and fulfill orders are expected. In factories, cobots (collaborative robots) with AI can work safely next to people, doing heavy lifting or precision work while the human focuses on quality or complex assembly steps. AI helps the robot adapt to the human’s pace and avoid collisions.

Skilled construction trades are seeing early automation too. Bricklaying robots can lay bricks for basic walls much faster than humans, with a human mason supervising the work (a person is still needed to keep the robot supplied with bricks and mortar and to make sure corners and other details are right). By using AI to align each brick and control the pattern, such robots achieve high precision. Similarly, robotic welding arms in construction can weld steel beams or pipes continuously without fatigue. These relieve human welders from long hours in difficult positions, letting them supervise multiple units or handle only the tricky parts.

In maintenance and field work, augmented reality (AR) combined with AI is assisting technicians. A mechanic wearing AR glasses might see an overlay on an engine that labels parts and highlights the likely faulty component based on an AI diagnosis. Companies have developed AI diagnostic tools where a worker can hold up a smartphone to a machine while an app listens to the machine’s sounds or reads sensor data to predict whether a part is failing. For example, an elevator technician could use an AI app that listens to the motor and immediately flags any component that is likely worn out, by comparing the recording against thousands of sound profiles of healthy and faulty motors. This AI-powered predictive maintenance helps technicians fix issues before a breakdown.
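The "compare against sound profiles of healthy vs faulty motors" step can be illustrated with a nearest-centroid classifier: turn each recording into a small feature vector, average the vectors for each label, and assign a new recording to the closest average. This is a deliberately simplified sketch, not how any particular product works: real tools typically use spectrogram features and trained models, and the two features here (RMS loudness and zero-crossing rate), the sample rate, and the synthetic "hum" and "whine" signals are all hypothetical stand-ins for real recordings.

```python
import math
from statistics import mean

SAMPLE_RATE_HZ = 16_000  # assumed microphone sample rate


def features(samples: list[float], sample_rate_hz: int = SAMPLE_RATE_HZ) -> tuple[float, float]:
    """Two simple acoustic features: RMS loudness and zero-crossing rate in kHz."""
    rms = math.sqrt(mean(s * s for s in samples))
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    # Expressing the rate in kHz keeps both features on a roughly similar scale.
    zcr_khz = crossings * sample_rate_hz / len(samples) / 1000
    return rms, zcr_khz


def classify(recording: list[float], references: dict[str, list[list[float]]]) -> str:
    """Nearest-centroid match: return the label whose average profile is closest."""
    probe = features(recording)
    centroids = {
        label: tuple(mean(dim) for dim in zip(*(features(r) for r in recs)))
        for label, recs in references.items()
    }
    return min(centroids, key=lambda label: math.dist(probe, centroids[label]))


def tone(components: list[tuple[float, float]], seconds: float = 0.25, phase: float = 0.0) -> list[float]:
    """Synthetic stand-in for a recording: a sum of (frequency_hz, amplitude) sine waves."""
    n = int(seconds * SAMPLE_RATE_HZ)
    return [
        sum(a * math.sin(2 * math.pi * f * i / SAMPLE_RATE_HZ + phase) for f, a in components)
        for i in range(n)
    ]


if __name__ == "__main__":
    # Hypothetical profile library: healthy motors hum at 120 Hz;
    # a worn component adds a 3 kHz whine on top of the hum.
    references = {
        "healthy": [tone([(120, 0.5)], phase=p) for p in (0.0, 1.0, 2.0)],
        "faulty": [tone([(120, 0.5), (3000, 0.3)], phase=p) for p in (0.0, 1.0, 2.0)],
    }
    print(classify(tone([(120, 0.5), (3000, 0.3)], phase=0.5), references))
```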

Worker safety is also improved by AI. On construction sites, computer vision can monitor whether workers are wearing helmets and safety vests, or alert if someone enters a dangerous area (like under a suspended load). Drones patrolling a site can use AI to spot hazards from above (e.g., a missing guard rail on scaffolding) and instantly notify supervisors. According to industry data, companies using AI-driven safety monitoring have seen accident rates drop significantly (one report noted up to a 25% reduction in workplace injuries by identifying risky situations early). For miners or oil field workers, wearable sensors with AI can detect signs of fatigue or dangerous gas levels in the environment and send alarms.

In logistics and trucking, while fully autonomous trucks are still being tested, there are intermediate steps: platooning (where a convoy of trucks uses AI to follow a lead truck closely to save fuel), or semi-autonomous features that reduce driver strain (such as automatic emergency braking and highway lane-keeping). Warehouse vehicles (forklifts, pallet movers) are increasingly autonomous; a single operator might oversee multiple autonomous forklifts that move materials around a depot, guided by AI routing algorithms.

In agriculture, another form of skilled manual labor (farmers, growers), AI and robotics are revolutionizing tasks like planting, weeding, and harvesting. Robots with AI vision can identify ripe fruit and pick it, or distinguish weeds from crops and eliminate them with lasers or mechanical tools, reducing the need for herbicides and manual weeding. Tractors are being equipped with GPS and AI to autonomously plow fields or plant seeds with precision down to the inch (precision agriculture). Drones scan fields and use AI to detect pest infestations or nutrient deficiencies via imaging, helping farmers make targeted interventions (like spraying only where needed). These technologies help tackle labor shortages in agriculture and can improve yield by tailoring care to each portion of a field.

For everyday home maintenance and homemakers: robotic vacuum cleaners (Roombas and the like) have been around for years, but newer ones use AI vision to avoid obstacles (they no longer choke on socks or cords) and map rooms more efficiently. In the next few years, we’ll have even smarter home robots that can do multi-step chores: for instance, a robot that vacuums, then switches to mopping, and then returns to its dock to empty its own dustbin. There are prototype laundry-folding robots (slow and expensive right now), which future iterations might speed up. Smart home AI acts like a home manager: learning your routines to adjust thermostats and lights, ordering groceries when staples run low (via connected fridge sensors or pantry scanners), and even cooking. Kitchen robots are indeed in development, such as robotic arms that can cook simple meals, or AI-enabled appliances that automatically adjust their settings (smart ovens that recognize the food and cook it exactly right). These technologies aim to reduce the manual effort of homemaking, effectively giving everyone some of the benefits of domestic help.

One interesting advancement is humanoid helper robots. Companies like 1X Technologies (backed by OpenAI) and Tesla (with its Optimus robot) are building human-sized robots that could potentially do various household or factory tasks. 1X’s NEO robot, unveiled in 2024, is designed for home use to help with chores and even provide companionship. It has a humanoid form and can manipulate objects. While these are still early-stage, the fact that multiple firms are investing in general-purpose robots suggests that within 5–10 years we might see them in controlled environments (perhaps as helpers in eldercare facilities or doing simple tasks in the homes of early adopters). By one estimate, up to 40% of household chores, including cleaning, laundry, and cooking, could be automated by 2033 thanks to advances in robotics and AI.

For general laborers like those in warehouses, construction, cleaning, etc., some job displacement is a concern, but in the near term it’s often a shift in role. A construction worker might become a robot operator or maintenance person rather than the one swinging the hammer all day. It changes the skill set – more tech management, less brute labor. The positive side is reduced risk and physical strain for workers. The negative is some might need significant retraining to adapt, and not everyone will find it easy to move into the new roles. Society will have to manage this transition, possibly through vocational training programs that teach workers how to work with and maintain these new machines.

In manufacturing, we’ve seen this pattern for decades – robots did the welding and painting, humans moved to supervision, quality control, or tasks that were too tricky to automate. The difference now is AI is expanding what tasks are “automatable” beyond repetitive, highly structured ones. Flexibility is the keyword: robots historically couldn’t deal with change, but AI is giving them a form of adaptability (like recognizing different parts). So the range of tasks they can do is broader.

However, not all skilled labor will be replaced. Trades like electricians or plumbers involve complex problem solving in unpredictable environments – an AI robot might not easily crawl through an attic and reroute wiring among unforeseen obstacles, at least not soon. What will happen though is these tradespeople will use AI tools: smart diagnostic devices to locate a wiring fault behind walls without tearing everything open, AR glasses that show pipe layouts and the next step in an installation procedure as they work, and apps that automatically quote a job by analyzing photos of the site.

Exoskeletons are another innovation for manual labor. These are wearable robots that assist human movement, reducing fatigue and the risk of injury. AI controls help them move naturally with the worker. Some warehouses and factories already have workers wearing exoskeleton vests to reduce strain when lifting heavy items, and adoption is likely to grow. They won’t turn people into supermen, but even a modest boost means a worker can lift 50 lbs repeatedly with much less strain.

In summary, the world of skilled and manual work is becoming a collaboration between human muscle and know-how and AI-driven machinery. This can lead to productivity leaps: one estimate suggests construction, an industry known for stagnating productivity, could see big gains with AI and robotics, as up to 30% of work becomes automated by mid-decade. For workers, it means fewer backbreaking tasks and hopefully fewer accidents, but also learning to work with high-tech tools. It’s crucial that this sector’s workforce gets adequate training to transition; otherwise we risk leaving some workers behind. Historically, technology replacing manual work has led to new, often better jobs (fewer farm laborers but more manufacturing jobs in the 20th century, for example). The hope is that, similarly, AI and robotics will create new roles, such as robot maintenance and logistics optimization, that employ those same people or the next generation in even more productive ways.

Home and Daily Life (Homemakers, Housewives, General Consumers)

AI’s influence extends into our homes and everyday routines, often in subtle but transformative ways. For those who manage households (whether homemakers or just anyone running their own home life), AI can act like a personal assistant and labor-saving device rolled into one. Already, many people interact with AI through voice assistants like Alexa, Google Assistant, or Siri. These are becoming smarter concierges that handle an expanding list of chores: from controlling appliances (vacuum the living room, start the washing machine) to managing schedules (reminding about kids’ school events or when to water the plants) and even offering basic companionship (engaging in casual conversation or playing one’s favorite music based on mood).

Smart appliances are a tangible manifestation of AI at home. Modern refrigerators can identify items inside and ping your phone when you’re low on milk (and even suggest recipes based on what’s left). Some can communicate with online grocery services to simplify reordering staples. AI ovens recognize what you put in (via camera) and automatically set the correct time and temperature – for example, detecting a chicken vs. a pizza and adjusting accordingly. As these appliances learn from your usage, they might start to anticipate needs: e.g., the coffee machine prepares a latte when it knows you usually have one at 7 AM on Saturdays.
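The coffee-machine example boils down to spotting a recurring (day, hour) habit in a usage log. A minimal sketch, assuming the appliance keeps timestamps of each brew and treats a slot as a routine once it shows up in a hypothetical 60% or more of the logged weeks, could look like this; `learn_routine` and the threshold are illustrative, not any manufacturer's method.

```python
from collections import Counter
from datetime import datetime

DAY_NAMES = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")


def learn_routine(brew_log: list[datetime], min_share: float = 0.6) -> set[tuple[int, int]]:
    """Return (weekday, hour) slots used in at least min_share of the logged weeks.

    weekday follows datetime.weekday(): Monday is 0, Saturday is 5.
    """
    # (ISO year, ISO week) pairs that appear anywhere in the log.
    weeks = {ts.isocalendar()[:2] for ts in brew_log}
    # Count each slot at most once per week so a second cup doesn't skew the habit.
    slot_weeks = {(ts.isocalendar()[:2], ts.weekday(), ts.hour) for ts in brew_log}
    counts = Counter((day, hour) for _, day, hour in slot_weeks)
    return {slot for slot, n in counts.items() if n / len(weeks) >= min_share}


if __name__ == "__main__":
    log = [datetime(2024, 1, d, 7, 5) for d in (6, 13, 20, 27)]  # four Saturday lattes
    log.append(datetime(2024, 1, 9, 8, 0))                       # one stray Tuesday
    for day, hour in sorted(learn_routine(log)):
        print(f"Pre-brew on {DAY_NAMES[day]} at {hour:02d}:00")
```

The one-off Tuesday brew falls below the threshold, so only the Saturday 7 AM habit would trigger a pre-brew.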

Cleaning and maintenance tasks are increasingly offloaded. Robot vacuums and mops have grown more reliable thanks to AI navigation. They map the house, avoid obstacles (identifying them with onboard cameras and AI, so no more smeared pet accidents), and even coordinate with each other (one vacuums, then another mops). Window-cleaning and pool-cleaning robots also use AI to maximize coverage without falling off edges. In a few years, we may have general cleaning robots that can tidy up, picking up objects and putting them where they belong, but this is harder because it requires advanced manipulation. Alternatively, telepresence robots combined with AI might let a remote human occasionally guide a robot through these kinds of tasks, effectively outsourcing chores. Meanwhile, simpler bots like automated lawnmowers are becoming common; they stay within virtual boundaries and keep the grass trimmed without human effort.

Home management AI helps with the cognitive load of running a household. Budgeting apps use AI to analyze spending and recommend ways to save, acting like a financial advisor for the family. Meal-planning apps can suggest a week’s menu that fits your dietary preferences, then compile a shopping list (or directly order the groceries for delivery). For busy parents, an AI scheduler can coordinate everyone’s calendars, finding optimal times for appointments or suggesting carpool arrangements by comparing schedules with other parents (with privacy controls). If an appliance is acting up, AI chatbots from manufacturers can guide the user through troubleshooting and even initiate a service call if needed by directly notifying a technician with the diagnostics.
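At its core, "finding optimal times by comparing schedules" is an interval problem: pool everyone's busy blocks, sort them, and scan for the first gap long enough for the appointment. The sketch below assumes each person's busy times have already been pulled from their calendars as (start, end) pairs in minutes after midnight; the function names and the sample schedules are hypothetical, and a real assistant would layer preferences and privacy controls on top.

```python
from typing import Optional


def to_minutes(hhmm: str) -> int:
    """Convert an 'HH:MM' string to minutes after midnight."""
    hours, minutes = map(int, hhmm.split(":"))
    return hours * 60 + minutes


def first_common_slot(
    busy_by_person: list[list[tuple[int, int]]],
    day_start: int,
    day_end: int,
    duration: int,
) -> Optional[tuple[int, int]]:
    """Earliest window of `duration` minutes in which nobody is busy, or None."""
    busy = sorted(block for person in busy_by_person for block in person)
    cursor = day_start  # everyone is free from `cursor` until the next busy block
    for start, end in busy:
        # Gap before this block, clipped so the slot never runs past day_end.
        if min(start, day_end) - cursor >= duration:
            return cursor, cursor + duration
        cursor = max(cursor, end)
    if day_end - cursor >= duration:
        return cursor, cursor + duration
    return None


if __name__ == "__main__":
    parent_a = [(to_minutes("09:00"), to_minutes("10:00")), (to_minutes("13:00"), to_minutes("14:00"))]
    parent_b = [(to_minutes("09:30"), to_minutes("11:00"))]
    slot = first_common_slot([parent_a, parent_b], to_minutes("09:00"), to_minutes("18:00"), 60)
    if slot:
        start, end = slot
        print(f"First shared hour: {start // 60:02d}:{start % 60:02d}-{end // 60:02d}:{end % 60:02d}")
```

Sorting all blocks together means overlapping commitments from different people merge naturally as the cursor advances, so the scan runs in O(n log n) for n busy blocks.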